Apr 30, 2019 | Srivats Shankar

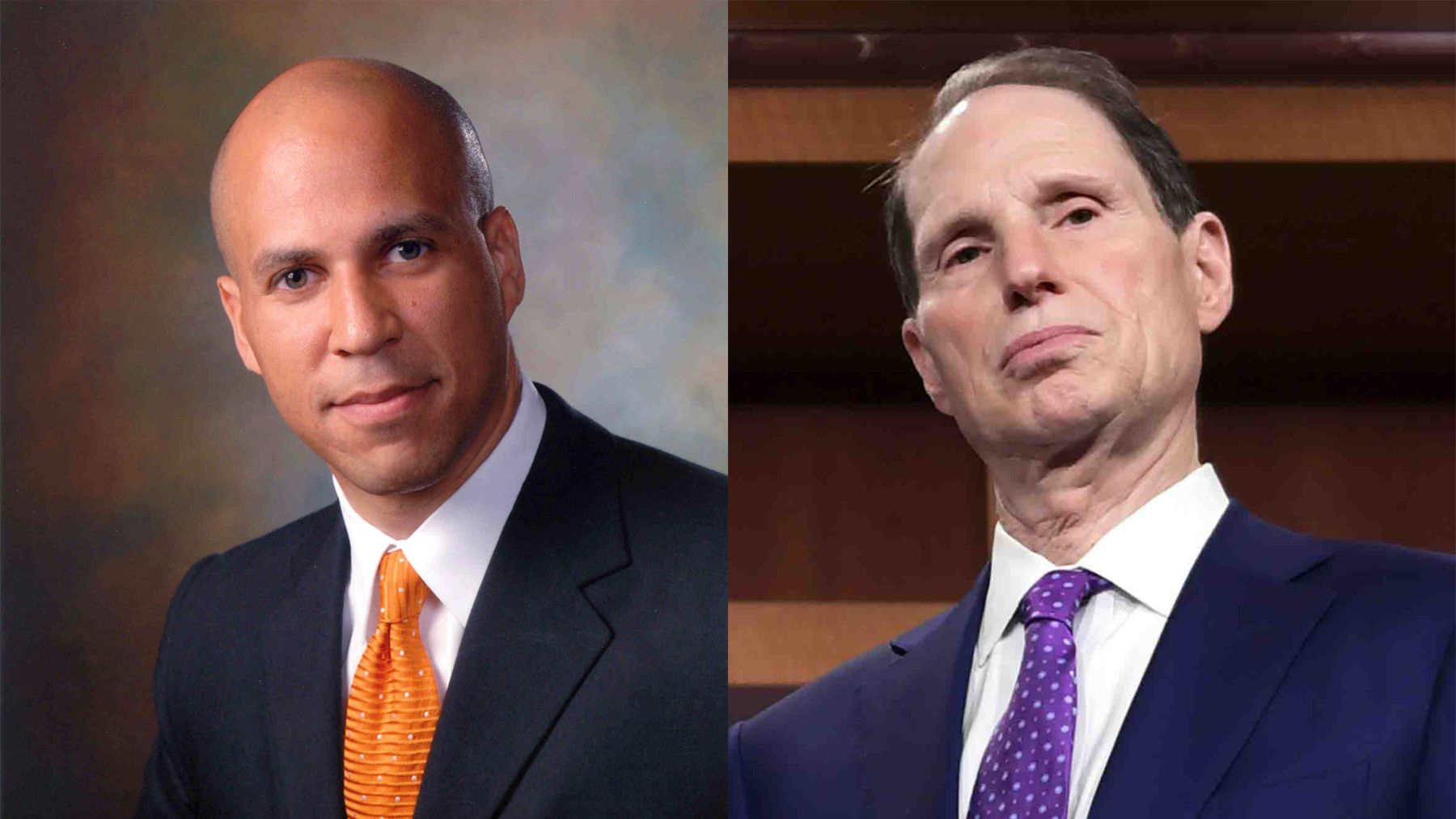

Senators Ron Wyden and Cory Booker consider the enactment of the Algorithmic Accountability Act to do away with bias in digital decision-making

Developing autonomous systems is a complicated process. The rise of machine learning and deep learning, often grouped together under the label AI (Artificial Intelligence), has made it possible to use real-world data and statistical methods to improve how computational systems operate. The larger a data set, the more contingencies it is likely to account for, though not necessarily. However, as AI proliferates rapidly, these data sets may not always be properly vetted. Data sets themselves may suffer from several issues, ranging from corruption and tampering to inconsistencies and human error.

Scientists have identified a number of paradoxes that may plague data sets. For example, the Accuracy Paradox describes a situation where the data overwhelmingly pertains to one category, so that a system can appear accurate while consistently producing false positives. This can be understood with reference to medical data, where a computational system has to detect whether someone has a fracture based on an X-ray. If a system is trained only on data from those who have sustained fractures, without any information about those who have not, then the likelihood of false positives would be very high. Another is Simpson’s Paradox, named after the statistician Edward Simpson, in which a pattern in the data changes or disappears once a variable that was left out is taken into account. Consider two groups, one of which performs exceptionally well in calculus while the other does not. With everything else remaining the same, including gender, race, and class, the gap seems almost inexplicable. It could be attributed to information that is not provided in the given context, for example the age of the groups. One group could be from middle school, while the other is from high school. Even though the groups were representative across all recorded categories, the lack of a specific category of information created a significant variance in the outcome.
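The fracture example can be made concrete with a small sketch. The numbers below are invented purely for illustration (95 fractured X-rays and 5 healthy ones), and the "model" is a stand-in that always predicts a fracture, as a system that had only ever seen fractures might. It is not drawn from any real study or system.

```python
# Toy illustration of the Accuracy Paradox on an imbalanced X-ray data set.
# All figures are hypothetical and chosen only to make the arithmetic visible.

def evaluate(predictions, labels):
    """Return (accuracy, false_positive_rate) for binary labels (1 = fracture)."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    false_pos = sum(p == 1 and y == 0 for p, y in zip(predictions, labels))
    negatives = sum(y == 0 for y in labels)
    accuracy = correct / len(labels)
    fpr = false_pos / negatives if negatives else 0.0
    return accuracy, fpr

# Hypothetical data set: 95 X-rays with a fracture, 5 without.
labels = [1] * 95 + [0] * 5

# A stand-in "model" that predicts a fracture for every X-ray.
always_fracture = [1] * len(labels)

accuracy, fpr = evaluate(always_fracture, labels)
print(f"accuracy = {accuracy:.2f}, false positive rate = {fpr:.2f}")
```

Under these assumptions the system looks 95% accurate, yet it flags every healthy patient as fractured. The headline accuracy figure hides the problem entirely.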

Problems with data sets are to be expected and can no doubt be considered the cost of progress. In a larger context, however, they create an issue: systems built on flawed data sets are used to automate processes, and the automation carries the bias along with it. One of the most cited examples in this regard is the use of AI to automate the hiring and evaluation of potential candidates. Simply disregarding information like gender, race, class, and age might be insufficient, since the data set itself may be inherently flawed. There may be indicators that identify a particular group which developers do not notice, yet which the AI picks up through its pattern recognition.
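A minimal sketch of how such an indicator can act as a stand-in for a protected attribute, even after that attribute has been removed, is given below. The applicant records, the `postcode` field, and the screening rule are all hypothetical and exist only to illustrate the point.

```python
# Toy illustration of a proxy feature. Records and field names are hypothetical.
from collections import defaultdict

# "group" is the protected attribute. The screening rule never looks at it.
applicants = (
    [{"group": "A", "postcode": "north"}] * 4
    + [{"group": "A", "postcode": "south"}] * 1
    + [{"group": "B", "postcode": "north"}] * 1
    + [{"group": "B", "postcode": "south"}] * 4
)

def screen(applicant):
    """Hypothetical rule learned from past hiring: favour one postcode."""
    return applicant["postcode"] == "north"

def selection_rates(records):
    """Share of applicants in each group that passes the screening rule."""
    totals, selected = defaultdict(int), defaultdict(int)
    for record in records:
        totals[record["group"]] += 1
        selected[record["group"]] += screen(record)
    return {group: selected[group] / totals[group] for group in totals}

rates = selection_rates(applicants)
print(rates)
```

In this invented data, group A passes the screen four times as often as group B, although the rule only ever reads the postcode. Because postcode and group are correlated, dropping the protected attribute does not remove the disparity.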

The deployment of these systems essentially normalizes bias. Because each system is a black box, it is very difficult for a third party to work out how it operates. Companies fiercely protect the intellectual property used to develop their systems. The problem lies not in the development or the IP, but in the consequences that the systems have for people.

To challenge existing hierarchies of bias within these systems, US Senators Ron Wyden and Cory Booker introduced the Algorithmic Accountability Act. It essentially empowers the Federal Trade Commission (FTC) to regulate any entity within its jurisdiction, provided that the entity has annual gross receipts of $50 million or more. As one of the first pieces of legislation in this context, it defines a number of fundamental terms, including automated decision system and high-risk decision system. Essentially, these terms cover systems that automatically process data, particularly personal data, and that have a high risk of bias based on a set of criteria provided under section 2(8)(B). The Act requires entities to carry out impact assessments that need to be submitted to the FTC. In addition, the FTC is granted certain procedural powers, including enforcement mechanisms that are consistent with the Federal Trade Commission Act (15 U.S.C. ch. 2).

As AI becomes increasingly integrated into people's daily lives, grappling with potential bias and discrimination within these systems will become an absolute necessity. Just last year, seven members of the US Congress considered these very questions by sending letters to the Federal Trade Commission, the Federal Bureau of Investigation, and the Equal Employment Opportunity Commission. They looked at the potential for bias within these systems and at whether fairness and accountability might deteriorate as a result of these changes. The letters also specifically raised with the FTC whether facial recognition, and its potential for bias, should be included within the agency's agenda.

Understanding how AI systems work is not easy, let alone with the additional burdens of maintaining trade secrets and intellectual property rights. These burdens can by no means be used as a shield to disregard the importance of diversity and equal opportunity within society. Often the intention is not to act prejudicially; rather, a given set of circumstances leads to a prejudicial outcome. Such legislation, though it may currently be seen as a burden, should become part of the larger discourse. Developers should actively seek to make their systems equitable, and a legal framework would support that work in the long run. There is no doubt that in the current situation it would serve as a hindrance, but as with all policy, it is better to be proactive than reactive.
